CSV File Input

![]() PLEASE NOTE: This documentation applies to an earlier version. For the most recent documentation, visit the Pentaho Enterprise Edition documentation site.

PLEASE NOTE: This documentation applies to an earlier version. For the most recent documentation, visit the Pentaho Enterprise Edition documentation site.

Description

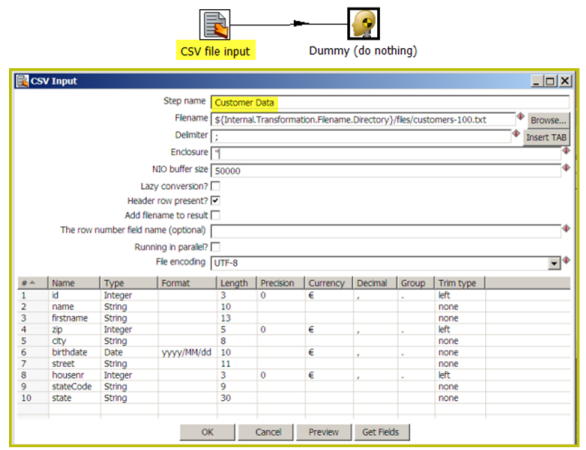

This step provides the ability to read data from a delimited file. The CSV label for this step is a misnomer because you can define whatever separator you want to use, such as pipes, tabs, and semicolons; you are not constrained to using commas. Internal processing allows this step to process data quickly. Options for this step are a subset of the Text File Input step. An example of a simple CSV Input transformation can be found under

...\samples\transformations\CSV Input - Reading customer data.ktr.

This step has fewer overall options than the general Text File Input step, but it has a few advantages over it:

- NIO -- Native system calls for reading the file means faster performance, but it is limited to only local files currently. No VFS support.

- Parallel running -- If you configure this step to run in multiple copies or in clustered mode, and you enable parallel running, each copy will read a separate block of a single file allowing you to distribute the file reading to several threads or even several slave nodes in a clustered transformation.

- Lazy conversion -- If you will be reading many fields from the file and many of those fields will not be manipulate, but merely passed through the transformation to land in some other text file or a database, lazy conversion can prevent Kettle from performing unnecessary work on those fields such as converting them into objects such as strings, dates, or numbers.

For information on valid date and numeric formats used in this step, view the Date Formatting Table and Number Formatting Table.

Options

The table below describes the options available for the CSV Input step:

Option | Description |

|---|---|

Step name | Name of the step.

|

Filename | Specify the name of the CSV file to read from. |

Delimiter | Specify the file delimiter character used in the target file. Special characters (e.g. CHAR HEX01) can be set with the format $[value], e.g. $[01] or $[6F,FF,00,1F]. |

Enclosure | Specify the enclosure character used in the target file. Special characters (e.g. CHAR HEX01) can be set with the format $[value], e.g. $[01] or $[6F,FF,00,1F]. |

NIO buffer size | This is the size of the read buffer. It represents the amount of bytes that is read in one time from disk. |

Lazy conversion | The lazy conversion algorithm will try to avoid unnecessary data type conversions and can result in a significant performance improvements if this is possible. The typical example that comes to mind is reading from a text file and writing back to a text file. |

Header row present? | Enable this option if the target file contains a header row containing column names. |

Add filename to result | Adds the CSV filename(s) read to the result of this transformation. A unique list is being kept in memory that can be used in the next job entry in a job, for example in another transformation. |

The row number field name (optional) | The name of the Integer field that will contain the row number in the output of this step. |

Running in parallel? | Check this box if you will have multiple instances of this step running (step copies) and if you want each instance to read a separate part of the CSV file(s).

|

File Encoding | Specify the encoding of the file being read. |

Fields Table | This table contains an ordered list of fields to be read from the target file. |

Preview button | Click to preview the data coming from the target file. |

Get Fields button | Click to return a list of fields from the target file based on the current settings (i.e. Delimiter, Enclosure, etc.). All fields identified will be added to the Fields Table. |

Metadata Injection Support

You can use the Metadata Injection supported fields with ETL Metadata Injection step to pass metadata to your transformation at runtime. The following Option and Value fields of the CSV File Input step support metadata injection:

- Options: Filename, Delimiter, Enclosure, NIO Buffer Size, Lazy Conversion, Header Row Present?, Add Filename to Result, The Row Number Field Name, Running in Parallel?, and File Encoding

- Values: Name, Length, Decimal, Type, Precision, Group, Format, Currency, and Trim Type