Hadoop Job Executor

Hadoop Job Executor

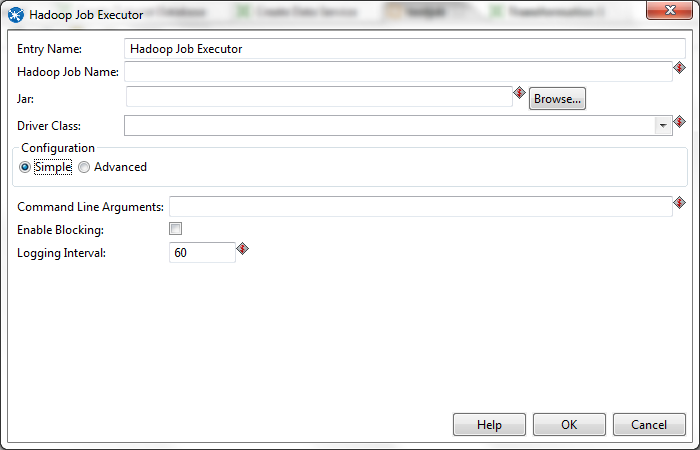

This job entry executes Hadoop jobs on a Hadoop node. There are two option modes: Simple (the default condition), in which you only pass a premade Java JAR to control the job; and Advanced, in which you are able to specify static main method parameters. Most of the options explained below are only available in Advanced mode. The User Defined tab in Advanced mode is for Hadoop option name/value pairs that are not defined in the Job Setup and Cluster tabs.

General

Option |

Definition |

|---|---|

Name |

The name of this Hadoop Job Executer step instance. |

Hadoop Job Name |

The name of the Hadoop job you are executing. |

Jar |

The Java JAR that contains your Hadoop mapper and reducer job instructions in a static main method. |

Command line arguments |

Any command line arguments that must be passed to the static main method in the specified JAR. |

Job Setup

Option |

Definition |

|---|---|

Output Key Class |

The Apache Hadoop class name that represents the output key's data type. |

Output Value Class |

The Apache Hadoop class name that represents the output value's data type. |

Mapper Class |

The Java class that will perform the map operation. Pentaho's default mapper class should be sufficient for most needs. Only change this value if you are supplying your own Java class to handle mapping. |

Combiner Class |

The Java class that will perform the combine operation. Pentaho's default combiner class should be sufficient for most needs. Only change this value if you are supplying your own Java class to handle combining. |

Reducer Class |

The Java class that will perform the reduce operation. Pentaho's default reducer class should be sufficient for most needs. Only change this value if you are supplying your own Java class to handle reducing. If you do not define a reducer class, then no reduce operation will be performed and the mapper or combiner output will be returned. |

Input Path |

The path to your input file on the Hadoop cluster. |

Output Path |

The path to your output file on the Hadoop cluster. |

Input Format |

The Apache Hadoop class name that represents the input file's data type. |

Output Format |

The Apache Hadoop class name that represents the output file's data type. |

Cluster

Option |

Definition |

|---|---|

HDFS Hostname |

Hostname for your Hadoop cluster. |

HDFS Port |

Port number for your Hadoop cluster. |

Job Tracker Hostname |

If you have a separate job tracker node, type in the hostname here. Otherwise use the HDFS hostname. |

Job Tracker Port |

Job tracker port number; this cannot be the same as the HDFS port number. |

Number of Mapper Tasks |

The number of mapper tasks you want to assign to this job. The size of the inputs should determine the number of mapper tasks. Typically there should be between 10-100 maps per node, though you can specify a higher number for mapper tasks that are not CPU-intensive. |

Number of Reducer Tasks |

The number of reducer tasks you want to assign to this job. Lower numbers mean that the reduce operations can launch immediately and start transferring map outputs as the maps finish. The higher the number, the quicker the nodes will finish their first round of reduces and launch a second round. Increasing the number of reduce operations increases the Hadoop framework overhead, but improves load balancing. If this is set to 0, then no reduce operation is performed, and the output of the mapper will be returned; also, combiner operations will also not be performed. |

Enable Blocking |

Forces the job to wait until each step completes before continuing to the next step. This is the only way for PDI to be aware of a Hadoop job's status. If left unchecked, the Hadoop job is blindly executed, and PDI moves on to the next step. Error handling/routing will not work unless this option is checked. |

Logging Interval |

Number of seconds between log messages. |

Hadoop Cluster

The Hadoop cluster configuration dialog allows you to specify configuration detail such as host names and ports for HDFS, Job Tracker, and other big data cluster components, which can be reused in transformation steps and job entries that support this feature.Option | Definition |

|---|---|

Cluster Name | Name that you assign the cluster configuration. |

Use MapR Client | Indicates that this configuration is for a MapR cluster. If this box is checked, the fields in the HDFS and JobTracker sections are disabled because those parameters are not needed to configure MapR. |

Hostname (in HDFS section) | Hostname for the HDFS node in your Hadoop cluster. |

Port (in HDFS section) | Port for the HDFS node in your Hadoop cluster. |

Username (in HDFS section) | Username for the HDFS node. |

Password (in HDFS section) | Password for the HDFS node. |

Hostname (in JobTracker section) | Hostname for the JobTracker node in your Hadoop cluster. If you have a separate job tracker node, type in the hostname here. Otherwise use the HDFS hostname. |

Port (in JobTracker section) | Port for the JobTracker in your Hadoop cluster. Job tracker port number; this cannot be the same as the HDFS port number. |

Hostname (in ZooKeeper section) | Hostname for the Zookeeper node in your Hadoop cluster. |

Port (in Zookeeper section) | Port for the Zookeeper node in your Hadoop cluster. |

URL (in Oozie section) | Field to enter an Oozie URL. This must be a valid Oozie location. |