![]() PLEASE NOTE: This documentation applies to Pentaho 7.1 and earlier. For Pentaho 8.0 and later, see HBase Input on the Pentaho Enterprise Edition documentation site.

PLEASE NOTE: This documentation applies to Pentaho 7.1 and earlier. For Pentaho 8.0 and later, see HBase Input on the Pentaho Enterprise Edition documentation site.

Description

This step reads data from an HBase table according to user-defined column metadata.

Options

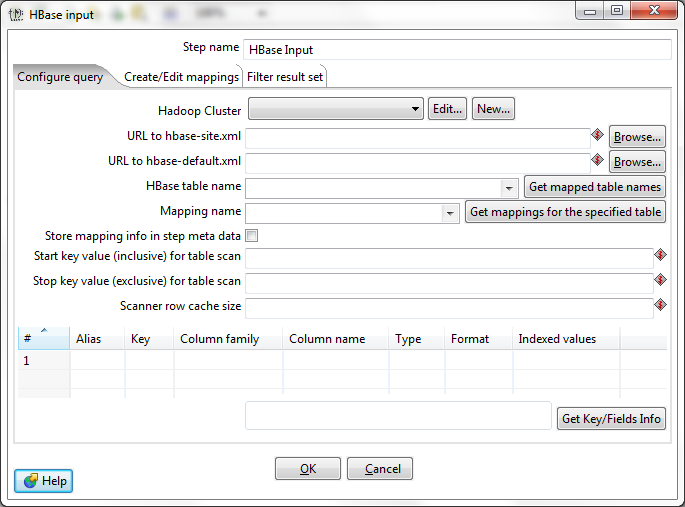

Configure Query Tab

This tab contains connection details and basic query information. You can configure a connection in one of two ways: using the Hadoop cluster properties, or by using an hbase-site.xml and (an optional) hbase-default.xml configuration file.

Below the connection details are fields to specify the source HBase table to read from and a mapping by which to decode and interpret column values. Before a value can be read from HBase it is necessary to tell the step which column family it belongs to and what its type is. Furthermore, it is necessary to specify type information about the key of the table. This is where a mapping comes into play and the user must define a mapping to use for a given source table.

Below the Mapping name text field are fields to limit a query on the source table to a range of key values. The middle of the tab is dominated by a list of fields (as defined in the selected mapping) that the step will output. The user can opt to output all or some of the fields defined in the mapping. Rows from the table may be deleted to select a subset of the fields. Clearing all rows from the table indicates that all fields defined in the mapping should be output

At the very bottom of the tab information on the HBase key field is given. This shows the name of the Key (as defined in the mapping) along with its type in the HBase table.

Option | Definition |

|---|---|

Step name | The name of this step as it appears in the transformation workspace. |

Hadoop Cluster | Allows you to create, edit, and select a Hadoop cluster configuration for use. Hadoop cluster configurations settings can be reused in transformation steps and job entries that support this feature. In a Hadoop cluster configuration, you can specify information like host names and ports for HDFS, Job Tracker, and other big data cluster components. The Edit button allows you to edit Hadoop cluster configuration information. The New button allows you to add a new Hadoop cluster configuration. Information on Hadoop clusters can be found in Pentaho Help. |

URL to hbase-site.xml | Address of the |

URL to hbase-default.xml | Address of the |

HBase table name | The source HBase table to read from. Click Get Mapped Table Names to populate the drop-down list of possible table names. |

Mapping name | A mapping to decode and interpret column values. Click Get Mappings For the Specified Table to populate the drop-down list of available mappings. |

Store mapping info in step meta data | Indicates whether to store mapping information in the step's meta data instead of loading it from HBase when it runs. |

Start key value (inclusive) for table scan | A starting key value to retrieve rows from. This is inclusive of the value entered. |

Stop key value (exclusive) for table scan | A stopping key value for the scan. This is exclusive of the value entered. Both fields or the stop key field may be left blank. If the stop key field is left blank, then all rows from (and including) the start key will be returned. |

Scanner row cache size | The number of rows that should be cached each time a fetch request is made to HBase. Leaving this blank uses the default, which is to perform no caching; one row would be returned per fetch request. Setting a value in this field will increase performance (faster scans) at the expense of memory consumption. |

# | The order of query limitation fields. |

Alias | The name that the field will be given in the output stream. |

Key | Indicates whether the field is the table's key field or not. |

Column family | The column family in the HBase source table that the field belongs to. |

Column name | The name of the column in the HBase table (family + column name uniquely identifies a column in the HBase table). |

Type | The PDI data type for the field. |

Format | A formatting mask to apply to the field. |

Indexed values | Indicates whether the field has a predefined set of values that it can assume. |

Get Key/Fields Info | Assuming the connection information is complete and valid, this button will populate the field list and display the name of the key. |

Hadoop Cluster

The Hadoop cluster configuration dialog allows you to specify configuration detail such as host names and ports for HDFS, Job Tracker, and other big data cluster components, which can be reused in transformation steps and job entries that support this feature.

Option | Definition |

|---|---|

Cluster Name | Name that you assign the cluster configuration. |

Use MapR Client | Indicates that this configuration is for a MapR cluster. If this box is checked, the fields in the HDFS and JobTracker sections are disabled because those parameters are not needed to configure MapR. |

Hostname (in HDFS section) | Hostname for the HDFS node in your Hadoop cluster. |

Port (in HDFS section) | Port for the HDFS node in your Hadoop cluster. |

Username (in HDFS section) | Username for the HDFS node. |

Password (in HDFS section) | Password for the HDFS node. |

Hostname (in JobTracker section) | Hostname for the JobTracker node in your Hadoop cluster. If you have a separate job tracker node, type in the hostname here. Otherwise use the HDFS hostname. |

Port (in JobTracker section) | Port for the JobTracker in your Hadoop cluster. Job tracker port number; this cannot be the same as the HDFS port number. |

Hostname (in ZooKeeper section) | Hostname for the Zookeeper node in your Hadoop cluster. |

Port (in Zookeeper section) | Port for the Zookeeper node in your Hadoop cluster. |

URL (in Oozie section) | Field to enter an Oozie URL. This must be a valid Oozie location. |

There are two fields by which the range of key values returned by a table scan can be limited. Leaving both blank will result in all rows being retrieved from the source table. The Start key field allows the user to enter a starting key value from which to retrieve rows. This is inclusive of the value entered. The Stop key field allows a stopping key value for the scan to be entered. This is exclusive of the value entered. Both fields or the stop key field may be left blank. If the stop key field is left blank then all rows from (and including) the start key will be returned.

A formatting string must be provided for date values involved in a range scan (and optionally for numbers). There are two ways to provide this information in the dialog:

- If the user has configured the step with fields from the mapping in question (i.e. the fields table in the Configure query tab is not completely blank), and the key is included in the fields to be output from the step, then a formatting string may be entered into the Format cell in the row corresponding to the key field.

- If the user has not opted to output the key from the step, or, the user has opted to output all fields in the mapping by leaving the fields table in the Configure query tab blank, then formatting information can be supplied for start and stop key values independently by suffixing the start or stop key value with the formatting string following a '@' separator character. For example, a date start key value of 1969-08-28 can be specified as:

1969-08-28@yyyy-MM-dd

Beneath the Stop key field is a text field that allows the user to specify how many rows should be cached each time a fetch request is made to HBase. Leaving this blank uses the default, which is to perform no caching - i.e. one row returned per fetch request. Setting a value in this field will increase performance (faster scans) at the expense of memory consumption.

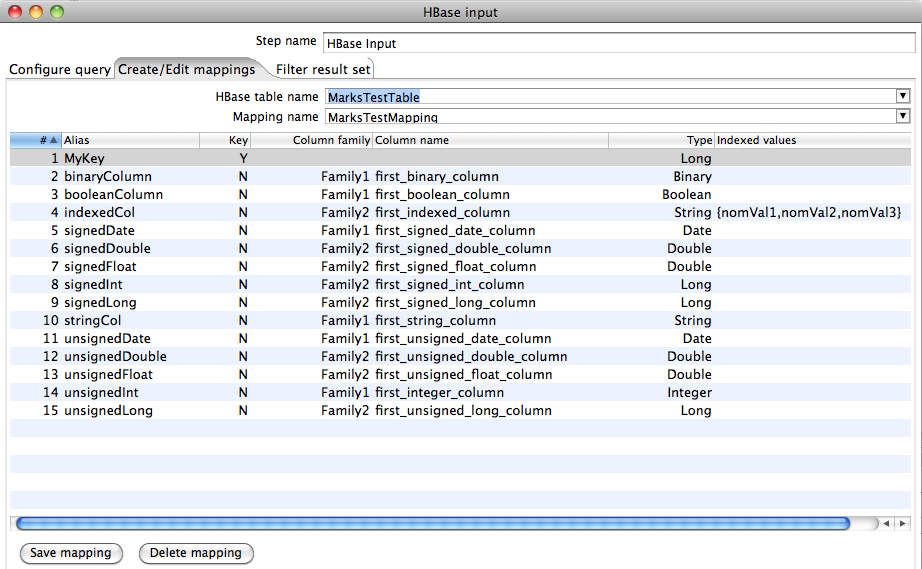

Create/Edit Mappings Tab

This tab creates or edits a mapping for a given HBase table. A mapping simply defines metadata about the values that are stored in the table. Since most information is stored as raw bytes in HBase, this enables PDI to decode values and execute meaningful comparisons for column-based result set filtering. The fields area of the tab can be used to enter information about the columns in the HBase table that the user wants to map. Selecting the name of an existing mapping will load the fields defined in that mapping into the fields area of the display.

A valid mapping must define meta data for at least the key of the source HBase table. The key must have an Alias specified because there is no name given to the key of an HBase table. Non-key columns must specify the Column family that they belong to and the Column name. An Alias is optional - if not supplied then the column name is used. All fields must have type information supplied.

Option | Definition |

|---|---|

HBase table name | Displays a list of table names. Connection information in the previous tab must be valid and complete in order for this drop-down list to populate. Selecting a table here will populate the Mapping name drop-down box with the names of any mappings that exist for the table. |

Mapping name | Names of any mappings that exist for the table. This box will be empty if there are no mappings defined for the selected table, in which case you can enter the name of a new mapping. |

# | The order of the mapping operation. |

Alias | The name you want to assign to the HBase table key. This is required for the table key column, but optional for non-key columns. |

Key | Indicates whether or not the field is the table's key. |

Column family | The column family in the HBase source table that the field belongs to. Non-key columns must specify a column family and column name. |

Column name | The name of the column in the HBase table. |

Type | Data type of the column. Key columns can be of type: String Integer Unsigned integer (positive only) Long Unsigned long (positive only) Date Unsigned date. |

Indexed values | String columns may optionally have a set of legal values defined for them by entering comma-separated data into this field. |

To enable meaningful range scans over key values it is necessary for keys to sort properly in HBase. This is why we make a distinction between unsigned and signed numbers. Since HBase stores integers/longs in twos complement internally it is necessary to flip the sign bit before storing a signed number in order to have positive numbers sort after negative ones. Unsigned integers/longs are assumed to have been stored directly without without inverting the sign bit. Note that keys that are dates are assumed to have been stored as just signed or unsigned longs (i.e. number of milliseconds elapsed since the epoch). If you have a key that is date in string format (ISO 8601 date-time format is designed such that lexicographic order corresponds to chronological order) it can be mapped as type String and then PDI can be used to change the type to Date for manipulation in the transformation.

No distinction is made between signed and unsigned numbers here because no sorting is performed by HBase on anything other than the key. Boolean values may be stored in HBase as 0/1 integer/long or as strings (Y/N, yes/no, true/false, T/F). BigNumber may be stored as either a serialized BigDecimal object or in string form (i.e. a string that can be parsed by BigDecimal's constructor). Serializable is any serialized Java object and Binary is a raw array of bytes. String columns may optionally have a set of legal values defined for them by entering comma-separated data into the Indexed values column in the fields table.

Pressing the Save mapping button will save the mapping. The user will be alerted to any missing information in the mapping definition and prompted to rectify it before the mapping is actually saved. Note that it is possible to define multiple mappings - involving different subsets of columns - on the same HBase table. The Delete mapping button allows the current named mapping for the current named table to be deleted from the mapping table. Note that this does not delete the actual HBase table in question.

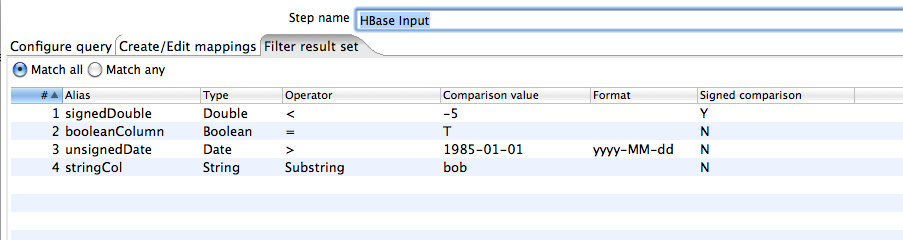

Filter Result Set Tab (Refining a Query Based on Column Values)

Within a range scan it is possible to further refine the set of rows returned by specifying filtering operations on the values of columns other than the key. The Filter result set tab allows the user to enter row filtering tests against one or more columns defined in the mapping.

Option | Definition |

|---|---|

Match all / Match any | When multiple column filters have been defined, you have the option returning only those rows that match all filters, or any single filter. Bounded ranges on a single numeric column can be defined by defining two filters (upper and lower bounds) and selecting Match all; similarly, open-ended ranges can be defined by selecting Match any. |

# | The order of the filter operation. |

Alias | A drop-down box of column alias names from the mapping. |

Type | Data type of the column. This is automatically populated when you select a field after choosing the alias. |

Operator | A drop-down box that contains either equality/inequality operators for numeric, date, and boolean fields; or substring and regular expression operators for string fields. |

Comparison value | A comparison constant to use in conjunction with the operator. |

Format | A formatting mask to apply to the field. |

Signed comparison | Specifies whether or not the comparison constant and/or field values involve negative numbers (for non-string fields only). If field values and comparison constants are only positive for a given filter, then HBase's native lexicographical byte-based comparisons are sufficient. If this is not the case, then it is necessary for column values to be deserialized from bytes to actual numbers before performing the comparison. |

NOTE: For exactly the same reasons as with the table key - two's complement is used internally which makes negative numbers bigger than positive ones when compared lexicographically according to their bytes). HBase Input ships with a custom comparator for deserializing column values before performing a comparison. This needs to be installed on each HBase node before signed comparisons will work correctly. Similarly, a special comparator for boolean values is provided that implements deserializing and interpreting boolean values from numbers and various string encodings.

When multiple column filters have been defined (as in the above screenshot), the user can opt to have returned only those rows that match all filters or any single filter. Note that bounded ranges on a single numeric column can be defined by defining two filters (upper and lower bounds) and selecting "Match all"; similarly, disjoint open-ended ranges can be defined by selecting "Match any".

Performance considerations

HBase server configuration and tuning aside, there are two things to consider when using HBase Input. The first (scanner row caching) has already been mentioned. The second involves selecting which columns from the specified mapping to return from a query. Specifying fields in the Configure query tab will result in scans that return just those columns. Since HBase is a sparse column-oriented database, this requires that HBase check to see for each row whether it contains a specific column. More lookups equate to reduced speed, although the use of Bloom filters (if enabled on the table in question) mitigates this to a certain extent. If, on the other hand, the fields table in the Configure query tab is left blank, it results in a scan that returns rows that contain all columns that exist in each row (not only those that have been defined in the mapping). However, HBase Input will only emit those columns that are defined in the mapping being used. Because all columns are returned it means that HBase does not have to do any lookups. However, if the table in question contains many columns and is dense then this will result in more data being pushed over the network.

Metadata Injection Support (7.x and later)

All fields of this step support metadata injection. You can use this step with ETL Metadata Injection to pass metadata to your transformation at runtime.